IV. Archive Classification

The primary question of this section is how reproducible and replicable workflows can be used to discover the optimal classification of text groups among the Drehem texts, which come from an unprovenanced archival context. We also describe how we leverage existing classification models to help validate our findings.

# import necessary libraries

import pandas as pd

from tqdm.auto import tqdm

# import libraries for this section

import re

import matplotlib.pyplot as plt

# import ML models from sklearn

from sklearn.linear_model import LogisticRegression

from sklearn.neighbors import KNeighborsClassifier

from sklearn.decomposition import PCA

from sklearn.naive_bayes import BernoulliNB

from sklearn.naive_bayes import GaussianNB

from sklearn import svm

# import train_test_split function

from sklearn.model_selection import train_test_split

from sklearn import metrics

1 Set up Data

Create a dictionary of archive categories

1.1 Labeling the Training Data

We label the data according to which words appear in it: each archive category below is associated with a list of keywords.

labels = dict()

labels['domesticated_animal'] = ['ox', 'cow', 'sheep', 'goat', 'lamb', '~sheep', 'equid'] # account for plural

#split domesticated into large and small - sheep, goat, lamb, ~sheep would be small domesticated animals

labels['wild_animal'] = ['bear', 'gazelle', 'mountain'] # account for 'mountain animal' and plural

labels['dead_animal'] = ['die'] # find 'die' before finding domesticated or wild

labels['leather_object'] = ['boots', 'sandals']

labels['precious_object'] = ['copper', 'bronze', 'silver', 'gold']

labels['wool'] = ['wool', '~wool']

# labels['queens_archive'] = []

Using filtered_with_neighbors.csv generated above, make P Number and id_line the new indices.

Separate components of the lemma.

words_df = pd.read_pickle('https://gitlab.com/yashila.bordag/sumnet-data/-/raw/main/part_3_words_output.p') # read from online file (comment out to use the local file instead)

#words_df = pd.read_pickle('output/part_3_output.p') #uncomment to read from local file

data = words_df.copy()

data.loc[:, 'pn'] = data.loc[:, 'id_text'].str[-6:].astype(int)

data = data.set_index(['pn', 'id_line']).sort_index()

extracted = data.loc[:, 'lemma'].str.extract(r'(\S+)\[(.*)\](\S+)')

data = pd.concat([data, extracted], axis=1)

data = data.fillna('') #.dropna() ????

data.head()

| lemma | id_text | id_word | label | date | dates_references | publication | collection | museum_no | ftype | metadata_source_x | prof? | role? | family? | number? | commodity? | neighbors | min_year | max_year | min_month | max_month | diri_month | min_day | max_day | questionable | other_meta_chars | multiple_dates | metadata_source_y | 0 | 1 | 2 | ||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| pn | id_line | |||||||||||||||||||||||||||||||

| 100041 | 3 | 6(diš)[]NU | P100041 | P100041.3.1 | o 1 | SSXX - 00 - 00 | SSXX - 00 - 00 | AAS 053 | Louvre Museum, Paris, France | AO 20313 | BDTNS | No | No | No | Yes | No | [] | 0 | 1 | False | 0 | 1 | False | False | False | BDTNS | 6(diš) | NU | ||||

| 3 | udu[sheep]N | P100041 | P100041.3.2 | o 1 | SSXX - 00 - 00 | SSXX - 00 - 00 | AAS 053 | Louvre Museum, Paris, France | AO 20313 | BDTNS | No | No | No | No | No | [] | 0 | 1 | False | 0 | 1 | False | False | False | BDTNS | udu | sheep | N | ||||

| 4 | kišib[seal]N | P100041 | P100041.4.1 | o 2 | SSXX - 00 - 00 | SSXX - 00 - 00 | AAS 053 | Louvre Museum, Paris, France | AO 20313 | BDTNS | No | No | No | No | No | [] | 0 | 1 | False | 0 | 1 | False | False | False | BDTNS | kišib | seal | N | ||||

| 4 | Lusuen[0]PN | P100041 | P100041.4.2 | o 2 | SSXX - 00 - 00 | SSXX - 00 - 00 | AAS 053 | Louvre Museum, Paris, France | AO 20313 | BDTNS | No | No | No | No | No | [6(diš)[]NU, udu[sheep]N, kišib[seal]N, Lusuen... | 0 | 1 | False | 0 | 1 | False | False | False | BDTNS | Lusuen | 0 | PN | ||||

| 5 | ki[place]N | P100041 | P100041.5.1 | o 3 | SSXX - 00 - 00 | SSXX - 00 - 00 | AAS 053 | Louvre Museum, Paris, France | AO 20313 | BDTNS | No | No | No | No | No | [] | 0 | 1 | False | 0 | 1 | False | False | False | BDTNS | ki | place | N |
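To make the lemma splitting concrete, the sketch below applies the same regular expression to three lemmas copied from the table above. The variable names `lemmas` and `parts` are illustrative and do not appear in the notebook.

```python
import pandas as pd

# Three lemmas copied from the table above; the Series itself is a toy example.
lemmas = pd.Series(['6(diš)[]NU', 'udu[sheep]N', 'Lusuen[0]PN'], name='lemma')

# Same pattern as in the cell above:
# column 0 = citation form, column 1 = guide word, column 2 = part of speech.
parts = lemmas.str.extract(r'(\S+)\[(.*)\](\S+)')
print(parts)
```

A lemma that does not match the pattern yields NaN in all three columns, which is presumably why the cell above follows the extraction with `fillna('')`.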

data['label'].value_counts()

o 1 46955

o 2 37850

o 3 36878

r 3 33824

o 4 32289

...

env o 10 1

o vii' 4 1

o ii 50 1

a i 9 1

seal S000081 ii 4 1

Name: label, Length: 2032, dtype: int64

# tag every word whose guide word (column 1) contains one of the category's keywords
for archive in labels.keys():
    data.loc[data.loc[:, 1].str.contains('|'.join([re.escape(x) for x in labels[archive]])), 'archive'] = archive

data.loc[:, 'archive'] = data.loc[:, 'archive'].fillna('')

data.head()

| lemma | id_text | id_word | label | date | dates_references | publication | collection | museum_no | ftype | metadata_source_x | prof? | role? | family? | number? | commodity? | neighbors | min_year | max_year | min_month | max_month | diri_month | min_day | max_day | questionable | other_meta_chars | multiple_dates | metadata_source_y | 0 | 1 | 2 | archive | ||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| pn | id_line | ||||||||||||||||||||||||||||||||

| 100041 | 3 | 6(diš)[]NU | P100041 | P100041.3.1 | o 1 | SSXX - 00 - 00 | SSXX - 00 - 00 | AAS 053 | Louvre Museum, Paris, France | AO 20313 | BDTNS | No | No | No | Yes | No | [] | 0 | 1 | False | 0 | 1 | False | False | False | BDTNS | 6(diš) | NU | |||||

| 3 | udu[sheep]N | P100041 | P100041.3.2 | o 1 | SSXX - 00 - 00 | SSXX - 00 - 00 | AAS 053 | Louvre Museum, Paris, France | AO 20313 | BDTNS | No | No | No | No | No | [] | 0 | 1 | False | 0 | 1 | False | False | False | BDTNS | udu | sheep | N | domesticated_animal | ||||

| 4 | kišib[seal]N | P100041 | P100041.4.1 | o 2 | SSXX - 00 - 00 | SSXX - 00 - 00 | AAS 053 | Louvre Museum, Paris, France | AO 20313 | BDTNS | No | No | No | No | No | [] | 0 | 1 | False | 0 | 1 | False | False | False | BDTNS | kišib | seal | N | |||||

| 4 | Lusuen[0]PN | P100041 | P100041.4.2 | o 2 | SSXX - 00 - 00 | SSXX - 00 - 00 | AAS 053 | Louvre Museum, Paris, France | AO 20313 | BDTNS | No | No | No | No | No | [6(diš)[]NU, udu[sheep]N, kišib[seal]N, Lusuen... | 0 | 1 | False | 0 | 1 | False | False | False | BDTNS | Lusuen | 0 | PN | |||||

| 5 | ki[place]N | P100041 | P100041.5.1 | o 3 | SSXX - 00 - 00 | SSXX - 00 - 00 | AAS 053 | Louvre Museum, Paris, France | AO 20313 | BDTNS | No | No | No | No | No | [] | 0 | 1 | False | 0 | 1 | False | False | False | BDTNS | ki | place | N |
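The keyword matching above can be tried on a small scale. The following is a minimal sketch under stated assumptions: `toy_labels` and `toy` are hypothetical miniatures of `labels` and of column 1 of `data`, and the `archive` column is initialized to an empty string up front instead of being filled afterwards.

```python
import re
import pandas as pd

# Hypothetical miniature of the `labels` dictionary.
toy_labels = {
    'domesticated_animal': ['sheep', 'goat'],
    'wild_animal': ['gazelle'],
    'dead_animal': ['die'],
}

# Hypothetical guide words, standing in for column 1 of `data`.
toy = pd.DataFrame({1: ['sheep', 'seal', 'gazelle', 'die', '~sheep']})
toy['archive'] = ''

for archive, keywords in toy_labels.items():
    # Escape each keyword and join them into one alternation pattern.
    pattern = '|'.join(re.escape(k) for k in keywords)
    toy.loc[toy[1].str.contains(pattern), 'archive'] = archive

print(toy)
```

Because `str.contains` matches substrings, the keyword `sheep` also matches the guide word `~sheep`, and categories that come later in the dictionary overwrite earlier ones when a guide word matches both.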

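The function get_set takes the rows of a single text as a DataFrame and returns a dictionary. Each key is a part-of-speech tag such as NU or PN, and each value is the set of citation forms with that tag. Words from seal lines are collected separately under the key SEALS.

1.2 Data Structuring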

def get_set(df):
    d = {}
    # separate the words on seal lines from the words of the main text
    seals = df[df['label'].str.contains('seal')]
    df = df[~df['label'].str.contains('seal')]
    # column 2 holds the part of speech, column 0 the citation form
    for x in df[2].unique():
        d[x] = set(df.loc[df[2] == x, 0])
    d['SEALS'] = {}
    for x in seals[2].unique():
        d['SEALS'][x] = set(seals.loc[seals[2] == x, 0])
    return d

get_set(data.loc[100271])

{'': {''},

'MN': {'Šueša'},

'N': {'itud', 'maš', 'mu', 'mu.DU', 'udu'},

'NU': {'1(diš)', '2(diš)'},

'PN': {'Apilatum', 'Ku.ru.ub.er₃', 'Šulgisimti'},

'SEALS': {},

'SN': {'Šašrum'},

'V/i': {'hulu'},

'V/t': {'dab'}}

archives = pd.DataFrame(data.groupby('pn').apply(lambda x: set(x['archive'].unique()) - set(['']))).rename(columns={0: 'archive'})

archives.loc[:, 'set'] = data.reset_index().groupby('pn').apply(get_set)

archives.loc[:, 'archive'] = archives.loc[:, 'archive'].apply(lambda x: {'dead_animal'} if 'dead_animal' in x else x)

archives.head()

| archive | set | |

|---|---|---|

| pn | ||

| 100041 | {domesticated_animal} | {'NU': {'6(diš)'}, 'N': {'ki', 'kišib', 'udu'}... |

| 100189 | {dead_animal} | {'NU': {'1(diš)', '5(diš)-kam', '2(diš)'}, 'N'... |

| 100190 | {dead_animal} | {'NU': {'3(u)', '1(diš)', '5(diš)', '1(diš)-ka... |

| 100191 | {dead_animal} | {'NU': {'1(diš)', '4(diš)', '4(diš)-kam', '2(u... |

| 100211 | {dead_animal} | {'NU': {'1(diš)', '1(u)', '1(diš)-kam', '2(diš... |

def get_line(row, pos_lst=['N']):
    words = {'pn' : [row.name]} #set p_number
    for pos in pos_lst:
        if pos in row['set']:
            #add word entries for all words of the selected part of speech
            words.update({word: [1] for word in row['set'][pos]})
    return pd.DataFrame(words)

Each row represents a unique P-number and each column a word, so the matrix indicates which words are present in each text.

sparse = words_df.groupby(by=['id_text', 'lemma']).count()

sparse = sparse['id_word'].unstack('lemma')

sparse = sparse.fillna(0)

sparse = pd.concat(archives.apply(get_line, axis=1).values).set_index('pn')

sparse

| ki | kišib | udu | itud | mu | ud | ga | sila | šu | mu.DU | maškim | ekišibak | zabardab | u | maš | šag | lugal | mašgal | kir | a | en | ensik | egia | igikar | ŋiri | ragaba | dubsar | mašda | saŋŋa | amar | mada | akiti | lu | ab | gud | ziga | uzud | ašgar | gukkal | šugid | ... | šaŋanla | pukutum | lagaztum | bangi | imdua | KU.du₃ | batiʾum | niŋna | sikiduʾa | gudumdum | šuhugari | šutur | gaguru | nindašura | ekaskalak | usaŋ | nammah | egizid | nisku | gara | saŋ.DUN₃ | muhaldimgal | šagiagal | šagiamah | kurunakgal | ugulaʾek | šidimgal | kalam | enkud | in | kiʾana | bahar | hurizum | lagab | ibadu | balla | šembulug | li | niŋsaha | ensi | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| pn | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 100041 | 1.0 | 1.0 | 1.0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 100189 | 1.0 | NaN | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 100190 | 1.0 | NaN | 1.0 | 1.0 | 1.0 | 1.0 | NaN | 1.0 | NaN | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 100191 | 1.0 | NaN | 1.0 | 1.0 | 1.0 | 1.0 | NaN | NaN | 1.0 | NaN | NaN | NaN | NaN | NaN | NaN | 1.0 | 1.0 | 1.0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 100211 | 1.0 | NaN | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 1.0 | 1.0 | 1.0 | 1.0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 519650 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 519658 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 519792 | NaN | NaN | 1.0 | 1.0 | 1.0 | 1.0 | NaN | 1.0 | NaN | 1.0 | NaN | NaN | NaN | NaN | 1.0 | NaN | NaN | 1.0 | NaN | 1.0 | NaN | 1.0 | NaN | NaN | NaN | 1.0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 1.0 | NaN | NaN | 1.0 | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 519957 | NaN | NaN | NaN | 1.0 | NaN | NaN | NaN | 1.0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | 1.0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 519959 | 1.0 | 1.0 | NaN | 1.0 | NaN | NaN | NaN | 1.0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | ... | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

15139 rows × 1076 columns
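The same kind of text-by-word presence matrix can be illustrated on toy data. The sketch below is an alternative construction using `explode` and `pd.crosstab`, not the notebook's own `get_line` approach; `toy_sets`, `pairs`, and `presence` are hypothetical names, and the two noun sets are made up for illustration.

```python
import pandas as pd

# Hypothetical per-text noun sets, standing in for row['set']['N'] in `archives`.
toy_sets = pd.Series(
    {100041: {'ki', 'kišib', 'udu'}, 100189: {'ki', 'udu', 'itud'}},
    name='noun',
)
toy_sets.index.name = 'pn'

# One row per (text, word) pair; sorting makes the row order deterministic.
pairs = toy_sets.apply(sorted).explode().reset_index()

# Cross-tabulate texts against words; clip keeps the entries at 0 or 1.
presence = pd.crosstab(pairs['pn'], pairs['noun']).clip(upper=1)
print(presence)
```

This variant produces 0 for absent words directly, whereas concatenating one-row frames leaves NaN that has to be filled afterwards.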

sparse = sparse.fillna(0)

sparse = sparse.join(archives.loc[:, 'archive'])

# add one 0/1 indicator column per archive category
for category in labels:
    sparse[category] = sparse['archive'].apply(lambda x: category in x).astype(float)

sparse.head()

| ki | kišib | udu | itud | mu | ud | ga | sila | šu | mu.DU | maškim | ekišibak | zabardab | u | maš | šag | lugal | mašgal | kir | a | en | ensik | egia | igikar | ŋiri | ragaba | dubsar | mašda | saŋŋa | amar | mada | akiti | lu | ab | gud | ziga | uzud | ašgar | gukkal | šugid | ... | niŋna | sikiduʾa | gudumdum | šuhugari | šutur | gaguru | nindašura | ekaskalak | usaŋ | nammah | egizid | nisku | gara | saŋ.DUN₃ | muhaldimgal | šagiagal | šagiamah | kurunakgal | ugulaʾek | šidimgal | kalam | enkud | in | kiʾana | bahar | hurizum | lagab | ibadu | balla | šembulug | li | niŋsaha | ensi | archive | domesticated_animal | wild_animal | dead_animal | leather_object | precious_object | wool | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| pn | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 100041 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {domesticated_animal} | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 100189 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {dead_animal} | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 |

| 100190 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {dead_animal} | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 |

| 100191 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {dead_animal} | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 |

| 100211 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {dead_animal} | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 |

5 rows × 1083 columns

known = sparse.loc[sparse['archive'].apply(len) == 1, :]

unknown = sparse.loc[(sparse['archive'].apply(len) == 0) | (sparse['archive'].apply(len) > 1), :]

unknown_0 = sparse.loc[(sparse['archive'].apply(len) == 0), :]

unknown.shape

(3243, 1083)
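The split into known and unknown texts depends only on how many labels each text received. A minimal sketch, assuming a toy frame whose three rows copy `archive` values shown in the tables of this section (`toy`, `known_toy`, and `unknown_toy` are illustrative names):

```python
import pandas as pd

# Three texts: exactly one label, no label, and two competing labels.
toy = pd.DataFrame(
    {'archive': [{'dead_animal'}, set(), {'domesticated_animal', 'wild_animal'}]},
    index=pd.Index([100189, 100292, 100217], name='pn'),
)

# Number of candidate archive labels per text.
n_labels = toy['archive'].apply(len)

known_toy = toy.loc[n_labels == 1]     # unambiguous label: usable as training data
unknown_toy = toy.loc[n_labels != 1]   # zero or several labels: left to be classified
print(known_toy.index.tolist(), unknown_toy.index.tolist())
```

Only texts with exactly one label end up in the known set; texts with no label and texts with conflicting labels are both treated as unknown.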

1.3 Data Exploration

unknown.loc[sparse['archive'].apply(len) > 1, :]

| ki | kišib | udu | itud | mu | ud | ga | sila | šu | mu.DU | maškim | ekišibak | zabardab | u | maš | šag | lugal | mašgal | kir | a | en | ensik | egia | igikar | ŋiri | ragaba | dubsar | mašda | saŋŋa | amar | mada | akiti | lu | ab | gud | ziga | uzud | ašgar | gukkal | šugid | ... | niŋna | sikiduʾa | gudumdum | šuhugari | šutur | gaguru | nindašura | ekaskalak | usaŋ | nammah | egizid | nisku | gara | saŋ.DUN₃ | muhaldimgal | šagiagal | šagiamah | kurunakgal | ugulaʾek | šidimgal | kalam | enkud | in | kiʾana | bahar | hurizum | lagab | ibadu | balla | šembulug | li | niŋsaha | ensi | archive | domesticated_animal | wild_animal | dead_animal | leather_object | precious_object | wool | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| pn | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 100217 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {domesticated_animal, wild_animal} | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 100229 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {domesticated_animal, wild_animal} | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 100284 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {domesticated_animal, wild_animal} | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 100330 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {domesticated_animal, wild_animal} | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 100749 | 1.0 | 0.0 | 1.0 | 0.0 | 1.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {domesticated_animal, wild_animal} | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 500210 | 1.0 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {domesticated_animal, wild_animal} | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 507968 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {domesticated_animal, wild_animal} | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 509325 | 1.0 | 0.0 | 1.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {domesticated_animal, wild_animal} | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 512780 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {leather_object, wool} | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 1.0 |

| 512812 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {domesticated_animal, wild_animal} | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 |

1195 rows × 1083 columns

#find rows where archive has empty set

unknown[unknown['archive'] == set()]

| ki | kišib | udu | itud | mu | ud | ga | sila | šu | mu.DU | maškim | ekišibak | zabardab | u | maš | šag | lugal | mašgal | kir | a | en | ensik | egia | igikar | ŋiri | ragaba | dubsar | mašda | saŋŋa | amar | mada | akiti | lu | ab | gud | ziga | uzud | ašgar | gukkal | šugid | ... | niŋna | sikiduʾa | gudumdum | šuhugari | šutur | gaguru | nindašura | ekaskalak | usaŋ | nammah | egizid | nisku | gara | saŋ.DUN₃ | muhaldimgal | šagiagal | šagiamah | kurunakgal | ugulaʾek | šidimgal | kalam | enkud | in | kiʾana | bahar | hurizum | lagab | ibadu | balla | šembulug | li | niŋsaha | ensi | archive | domesticated_animal | wild_animal | dead_animal | leather_object | precious_object | wool | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| pn | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 100292 | 1.0 | 0.0 | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {} | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 100301 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {} | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 100375 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {} | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 100376 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {} | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 100377 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {} | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 519647 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {} | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 519650 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {} | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 519658 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {} | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 519957 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {} | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 519959 | 1.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {} | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

2048 rows × 1083 columns

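# note: this binds a second name to the same DataFrame; it does not create a copy

known_copy = known

# pop() removes the single label from each set, so the sets in known['archive'] are left empty afterwards

known_archives = [archive.pop() for archive in known_copy['archive']]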

known_archives

['domesticated_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'precious_object',

'precious_object',

'precious_object',

'precious_object',

'precious_object',

'wool',

'wool',

'domesticated_animal',

'domesticated_animal',

'precious_object',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'leather_object',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'precious_object',

'domesticated_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'wool',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'wild_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'wild_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'leather_object',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'dead_animal',

'wild_animal',

'wild_animal',

'dead_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'wool',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'precious_object',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'precious_object',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'precious_object',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'precious_object',

'wild_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'precious_object',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'precious_object',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'precious_object',

'domesticated_animal',

'precious_object',

'dead_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'precious_object',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'precious_object',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'precious_object',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'precious_object',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'precious_object',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'dead_animal',

'domesticated_animal',

'dead_animal',

'precious_object',

'domesticated_animal',

'domesticated_animal',

'precious_object',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'precious_object',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'precious_object',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'precious_object',

'domesticated_animal',

'wild_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'precious_object',

'domesticated_animal',

'precious_object',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'wild_animal',

'precious_object',

'dead_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'precious_object',

'domesticated_animal',

'dead_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'domesticated_animal',

'dead_animal',

'wild_animal',

'domesticated_animal',

'domesticated_animal',

...]
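The extraction of `known_archives` above relies on `set.pop()`, which removes the element it returns. This is consistent with the `{}` entries in the `archive` column of the `known` table shown later. If the sets should stay intact, a non-mutating read is possible. The following is a minimal sketch under the assumption that every `archive` entry is a set holding exactly one label; the DataFrame `toy` and its values are hypothetical stand-ins for `known`.

```python
import pandas as pd

# Hypothetical stand-in for `known`: each 'archive' cell holds a one-element set.
toy = pd.DataFrame(
    {'archive': [{'dead_animal'}, {'wool'}, {'domesticated_animal'}]},
    index=[100189, 100041, 100376],
)

# next(iter(s)) reads the single element without removing it from the set.
toy_archives = [next(iter(labels)) for labels in toy['archive']]

print(toy_archives)
print(toy['archive'].tolist())  # the sets are still populated
```

Note that `DataFrame.copy()` alone would not protect the sets, because the copied object column still refers to the same Python set objects.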

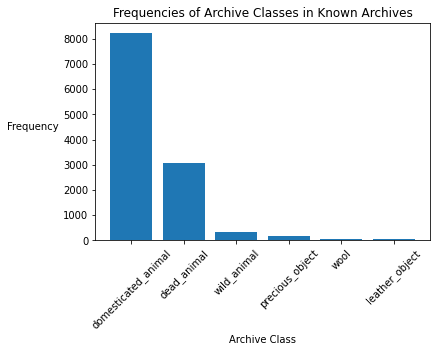

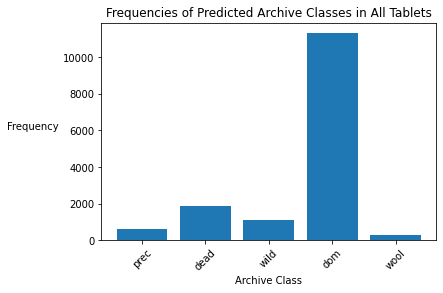

known['archive_class'] = known_archives

archive_counts = known['archive_class'].value_counts()

plt.xlabel('Archive Class')

plt.ylabel('Frequency', rotation=0, labelpad=30)

plt.title('Frequencies of Archive Classes in Known Archives')

plt.xticks(rotation=45)

plt.bar(archive_counts.index, archive_counts);

percent_domesticated_animal = archive_counts['domesticated_animal'] / sum(archive_counts)

print('Percent of texts in Domesticated Animal Archive:', percent_domesticated_animal)

Percent of texts in Domesticated Animal Archive: 0.6905682582380632
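The value printed above is a fraction rather than a percentage: roughly 69% of the labeled texts fall in the domesticated-animal class. This imbalance is worth keeping in mind when evaluating classifiers, since a model that always predicts the majority class would already reach about that accuracy on this set. A minimal sketch of computing all class shares at once with `value_counts(normalize=True)` follows; the series `toy_labels` and its values are made up for illustration.

```python
import pandas as pd

# Hypothetical label column with an imbalanced class distribution.
toy_labels = pd.Series(
    ['domesticated_animal'] * 7 + ['dead_animal'] * 2 + ['wild_animal'],
    name='archive_class',
)

# normalize=True returns each class's share of the total instead of raw counts.
class_shares = toy_labels.value_counts(normalize=True)

# The largest share is the accuracy of an "always predict the majority" baseline.
majority_class = class_shares.idxmax()
majority_baseline = class_shares.max()

print(class_shares)
print(f'Majority class: {majority_class} ({majority_baseline:.1%} of texts)')
```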

known.shape

(11896, 1084)

words_df_copy = words_df.copy()

words_df_copy['id_text'] = [int(pn[1:]) for pn in words_df_copy['id_text']]

grouped = words_df_copy.groupby(by=['id_text']).first()

grouped = grouped.fillna(0)
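The cell above converts text IDs to integers so that they can be matched against the integer index of `known`, then keeps one row per text. A minimal sketch of the same steps on a small hypothetical table (the IDs, lemmas, and years in `toy_words` are illustrative, not taken from the data):

```python
import pandas as pd

# Hypothetical word-level rows: several words per text, one year per text.
toy_words = pd.DataFrame({
    'id_text': ['P100041', 'P100041', 'P100189', 'P100189', 'P100190'],
    'lemma': ['udu', 'ki', 'udu', 'itud', 'mu'],
    'min_year': [3.0, 3.0, None, None, 7.0],
})

# Drop the leading 'P' and convert to int so the IDs match an integer index.
toy_words['id_text'] = toy_words['id_text'].str[1:].astype(int)

# first() takes the first non-null value of each column within each group;
# texts with no year at all stay NaN and are then filled with 0.
toy_grouped = toy_words.groupby('id_text').first().fillna(0)

print(toy_grouped)
```

Because missing years are filled with 0, a year of 0 here means "unknown", which is presumably why row 0 is dropped before the time plot.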

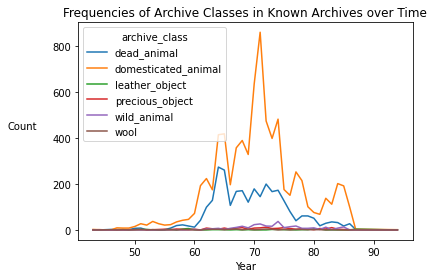

known_copy = known.copy()

known_copy['year'] = grouped.loc[grouped.index.isin(known.index),:]['min_year']

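# count texts per (year, archive class); after count() each remaining column holds a per-group count, so 'ki' is kept as the count column

year_counts = known_copy.groupby(by=['year', 'archive_class'], as_index=False).count().set_index('year').loc[:, 'archive_class':'ki']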

year_counts_pivoted = year_counts.pivot(columns='archive_class', values='ki').fillna(0)

year_counts_pivoted.drop(index=0).plot();

plt.xlabel('Year')

plt.ylabel('Count', rotation=0, labelpad=30)

plt.title('Frequencies of Archive Classes in Known Archives over Time');
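The count-then-pivot steps above build a year-by-class table of text counts. As an alternative sketch, `pd.crosstab` produces the same kind of table in one call and fills missing combinations with 0. The frame `toy_known` below is hypothetical and only mimics the `year` and `archive_class` columns.

```python
import pandas as pd

# Hypothetical stand-in for the 'year' and 'archive_class' columns of known_copy.
toy_known = pd.DataFrame({
    'year': [1, 1, 1, 2, 2, 3],
    'archive_class': ['domesticated_animal', 'domesticated_animal', 'dead_animal',
                      'domesticated_animal', 'wild_animal', 'dead_animal'],
})

# Rows are years, columns are archive classes, cells are text counts.
toy_year_counts = pd.crosstab(toy_known['year'], toy_known['archive_class'])

print(toy_year_counts)
```

Calling `toy_year_counts.plot()` on the result would draw one line per archive class, as in the plot above.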

known

| ki | kišib | udu | itud | mu | ud | ga | sila | šu | mu.DU | maškim | ekišibak | zabardab | u | maš | šag | lugal | mašgal | kir | a | en | ensik | egia | igikar | ŋiri | ragaba | dubsar | mašda | saŋŋa | amar | mada | akiti | lu | ab | gud | ziga | uzud | ašgar | gukkal | šugid | ... | sikiduʾa | gudumdum | šuhugari | šutur | gaguru | nindašura | ekaskalak | usaŋ | nammah | egizid | nisku | gara | saŋ.DUN₃ | muhaldimgal | šagiagal | šagiamah | kurunakgal | ugulaʾek | šidimgal | kalam | enkud | in | kiʾana | bahar | hurizum | lagab | ibadu | balla | šembulug | li | niŋsaha | ensi | archive | domesticated_animal | wild_animal | dead_animal | leather_object | precious_object | wool | archive_class | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| pn | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| 100041 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {} | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | domesticated_animal |

| 100189 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {} | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | dead_animal |

| 100190 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {} | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | dead_animal |

| 100191 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {} | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | dead_animal |

| 100211 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {} | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | dead_animal |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 514376 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {} | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | domesticated_animal |

| 517184 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 1.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | {} | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | dead_animal |

| 519457 | 1.0 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {} | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | domesticated_animal |

| 519521 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {} | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | wild_animal |

| 519792 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 0.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 1.0 | 0.0 | 1.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | {} | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | domesticated_animal |

11896 rows × 1084 columns

#run to save the prepared data

known.to_csv('output/part_4_known.csv')

known.to_pickle('output/part_4_known.p')

unknown.to_csv('output/part_4_unknown.csv')

unknown.to_pickle('output/part_4_unknown.p')

unknown_0.to_csv('output/part_4_unknown_0.csv')

unknown_0.to_pickle('output/part_4_unknown_0.p')

#uncomment to load the prepared data from the online files instead of regenerating it

#known = pd.read_pickle('https://gitlab.com/yashila.bordag/sumnet-data/-/raw/main/part_4_known.p')

#unknown = pd.read_pickle('https://gitlab.com/yashila.bordag/sumnet-data/-/raw/main/part_4_unknown.p')

#unknown_0 = pd.read_pickle('https://gitlab.com/yashila.bordag/sumnet-data/-/raw/main/part_4_unknown_0.p')

model_weights = {}

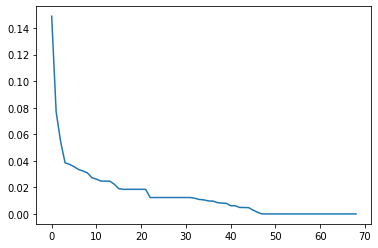

1.3.1 PCA/Dimensionality Reduction¶

Here we perform PCA to learn more about the underlying structure of the dataset. We analyze the two most important principal components and explore how much of the variation in the known set they account for.

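# PCA: fit on the feature columns 'AN.bu.um' through 'šuʾura' of the known set

# n_components is left at its default, so all components are kept

pca_archive = PCA()

principalComponents_archive = pca_archive.fit_transform(known.loc[:, 'AN.bu.um':'šuʾura'])

# wrap the transformed data in a DataFrame with one named column per component

principal_archive_Df = pd.DataFrame(data=principalComponents_archive,

columns=['principal component ' + str(i) for i in range(1, 1 + principalComponents_archive.shape[1])])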

len(known.loc[:, 'AN.bu.um':'šuʾura'].columns)

69
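To see how much of the variation each component accounts for, one can read the `explained_variance_ratio_` attribute of a fitted scikit-learn `PCA` object. The following is a minimal sketch on synthetic data, not the notebook's own analysis: the array `X`, its shape, and the duplicated column are assumptions made purely for illustration.

```python
import numpy as np
from sklearn.decomposition import PCA

# Hypothetical stand-in for the known set: 200 rows of 0/1 features.
rng = np.random.default_rng(0)
X = rng.integers(0, 2, size=(200, 10)).astype(float)

# Duplicate one column so that the data is rank-deficient; the last
# component then carries (numerically) no variance.
X[:, 9] = X[:, 0]

pca = PCA()  # keep all components
pca.fit(X)

# Fraction of total variance explained by each component, in decreasing order.
ratios = pca.explained_variance_ratio_
cumulative = np.cumsum(ratios)

# Share of the variance captured by the first two components together.
top_two_share = cumulative[1]

for i, (r, c) in enumerate(zip(ratios, cumulative), start=1):
    print(f"principal component {i}: {r:.4f} (cumulative {c:.4f})")
print(f"first two components together: {top_two_share:.4f}")
```

In the notebook, the same attribute could be read from the fitted `pca_archive` object. Components whose ratio is effectively zero contribute nothing beyond floating-point noise, which is one possible reading of values on the order of 1e-17 in a transformed table.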

principal_archive_Df

| | principal component 1 | principal component 2 | principal component 3 | principal component 4 | principal component 5 | principal component 6 | principal component 7 | principal component 8 | principal component 9 | principal component 10 | principal component 11 | principal component 12 | principal component 13 | principal component 14 | principal component 15 | principal component 16 | principal component 17 | principal component 18 | principal component 19 | principal component 20 | principal component 21 | principal component 22 | principal component 23 | principal component 24 | principal component 25 | principal component 26 | principal component 27 | principal component 28 | principal component 29 | principal component 30 | principal component 31 | principal component 32 | principal component 33 | principal component 34 | principal component 35 | principal component 36 | principal component 37 | principal component 38 | principal component 39 | principal component 40 | principal component 41 | principal component 42 | principal component 43 | principal component 44 | principal component 45 | principal component 46 | principal component 47 | principal component 48 | principal component 49 | principal component 50 | principal component 51 | principal component 52 | principal component 53 | principal component 54 | principal component 55 | principal component 56 | principal component 57 | principal component 58 | principal component 59 | principal component 60 | principal component 61 | principal component 62 | principal component 63 | principal component 64 | principal component 65 | principal component 66 | principal component 67 | principal component 68 | principal component 69 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | -0.001153 | -0.000651 | -0.000559 | -0.000524 | 0.00003 | 0.000357 | -0.000339 | -0.000428 | -0.000424 | -0.000372 | -0.000382 | -5.157586e-17 | 2.706112e-15 | -0.000481 | -0.000306 | 0.000033 | -3.429768e-16 | 5.923137e-17 | 4.357782e-17 | -2.465750e-16 | 2.860153e-16 | -0.000624 | 3.966646e-17 | 2.603275e-16 | -4.583354e-17 | -2.646101e-17 | 8.451599e-18 | -3.045379e-17 | 1.346336e-17 | 9.688025e-17 | 1.936758e-17 | -0.00051 | 0.000105 | -0.000049 | -0.000055 | -0.000027 | -0.000113 | -0.000011 | -0.000028 | -0.000117 | -1.617357e-16 | -0.000085 | -0.000069 | -0.000107 | 0.000015 | -0.000031 | -0.000042 | 1.970750e-15 | 1.869525e-17 | 9.415297e-16 | 2.019903e-15 | -1.169278e-18 | 1.894373e-20 | -7.211354e-21 | -2.128106e-20 | 1.746307e-21 | -2.687171e-20 | -2.849700e-20 | 2.445157e-21 | 1.963535e-20 | 3.486655e-21 | -2.377182e-22 | 1.506376e-20 | 1.319668e-21 | -2.913316e-23 | 4.035962e-23 | -2.844907e-23 | 5.776068e-23 | -3.962093e-21 |

| 1 | -0.001153 | -0.000651 | -0.000559 | -0.000524 | 0.00003 | 0.000357 | -0.000339 | -0.000428 | -0.000424 | -0.000372 | -0.000382 | 1.564922e-16 | 2.649608e-15 | -0.000481 | -0.000306 | 0.000033 | -2.727474e-16 | -3.278168e-17 | -2.041174e-17 | 1.211487e-16 | 4.041074e-17 | -0.000624 | 1.172185e-16 | 2.181246e-17 | 3.816922e-17 | -1.415496e-17 | -8.185121e-17 | 1.835050e-16 | -2.723499e-16 | 1.982747e-16 | -1.303244e-16 | -0.00051 | 0.000105 | -0.000049 | -0.000055 | -0.000027 | -0.000113 | -0.000011 | -0.000028 | -0.000117 | -6.575466e-17 | -0.000085 | -0.000069 | -0.000107 | 0.000015 | -0.000031 | -0.000042 | 9.619220e-15 | -6.600049e-17 | -1.429446e-16 | -1.916333e-16 | -4.540458e-17 | 3.457458e-19 | -6.477865e-19 | 3.464390e-19 | -1.141279e-19 | 2.092593e-19 | 1.045044e-18 | 1.123598e-18 | -1.462768e-18 | 1.294085e-18 | 4.663841e-19 | -3.597813e-19 | -4.046188e-20 | -1.200163e-20 | -1.178408e-20 | -6.275206e-21 | -1.761853e-20 | 6.692983e-20 |

| 2 | -0.001153 | -0.000651 | -0.000559 | -0.000524 | 0.00003 | 0.000357 | -0.000339 | -0.000428 | -0.000424 | -0.000372 | -0.000382 | 1.535254e-16 | 2.658846e-15 | -0.000481 | -0.000306 | 0.000033 | -2.825484e-16 | -3.280272e-17 | -2.539356e-17 | 1.231791e-16 | 4.753436e-17 | -0.000624 | 1.205493e-16 | 2.333757e-17 | 3.548553e-17 | -1.480301e-17 | -8.340641e-17 | 1.838694e-16 | -2.681072e-16 | 1.870916e-16 | -1.443350e-16 | -0.00051 | 0.000105 | -0.000049 | -0.000055 | -0.000027 | -0.000113 | -0.000011 | -0.000028 | -0.000117 | -6.308277e-17 | -0.000085 | -0.000069 | -0.000107 | 0.000015 | -0.000031 | -0.000042 | 9.504357e-15 | -6.459051e-17 | -1.301681e-16 | -1.933703e-16 | -3.696746e-17 | 1.681898e-19 | -6.751741e-19 | 4.477259e-19 | -7.269816e-20 | 4.045474e-19 | 1.223637e-18 | 1.075851e-18 | -1.593793e-18 | 1.216670e-18 | 2.723570e-19 | -4.703521e-19 | 3.232220e-19 | 7.222399e-21 | 5.820028e-21 | 5.370710e-21 | 2.005591e-20 | 1.115431e-19 |

| 3 | -0.001153 | -0.000651 | -0.000559 | -0.000524 | 0.00003 | 0.000357 | -0.000339 | -0.000428 | -0.000424 | -0.000372 | -0.000382 | -9.229732e-18 | 2.727819e-15 | -0.000481 | -0.000306 | 0.000033 | -2.245254e-16 | -2.029954e-17 | -1.117705e-17 | -8.220052e-17 | 5.707972e-17 | -0.000624 | -3.630915e-18 | 1.008721e-16 | 8.512500e-19 | 4.287588e-18 | -4.577265e-18 | -8.689851e-19 | -1.644222e-17 | 4.959985e-17 | -1.224857e-16 | -0.00051 | 0.000105 | -0.000049 | -0.000055 | -0.000027 | -0.000113 | -0.000011 | -0.000028 | -0.000117 | -2.502096e-17 | -0.000085 | -0.000069 | -0.000107 | 0.000015 | -0.000031 | -0.000042 | -3.722096e-18 | -1.397288e-18 | 2.365451e-19 | 5.917212e-21 | -1.645157e-19 | -4.078526e-21 | -4.933178e-20 | 2.974763e-20 | -5.485142e-20 | -1.298604e-19 | -6.587155e-20 | -1.116076e-19 | 1.338942e-19 | 8.165997e-20 | 1.384465e-19 | -1.084071e-20 | 9.970619e-21 | -4.728920e-20 | -4.616010e-20 | -2.324160e-20 | -6.416898e-20 | -3.414129e-20 |

| 4 | -0.001153 | -0.000651 | -0.000559 | -0.000524 | 0.00003 | 0.000357 | -0.000339 | -0.000428 | -0.000424 | -0.000372 | -0.000382 | 1.769313e-18 | 2.735146e-15 | -0.000481 | -0.000306 | 0.000033 | -1.948217e-16 | -3.447135e-17 | -4.074711e-17 | -7.168826e-17 | 4.512882e-17 | -0.000624 | 1.529291e-17 | 1.304296e-16 | -1.218269e-18 | 6.169002e-18 | -1.717860e-17 | -2.588438e-17 | -2.077230e-17 | 3.981505e-17 | -1.113311e-16 | -0.00051 | 0.000105 | -0.000049 | -0.000055 | -0.000027 | -0.000113 | -0.000011 | -0.000028 | -0.000117 | -2.206909e-17 | -0.000085 | -0.000069 | -0.000107 | 0.000015 | -0.000031 | -0.000042 | -2.521881e-17 | -1.442576e-17 | -1.046305e-16 | 3.904241e-17 | 1.555701e-17 | 1.626597e-17 | 2.837998e-17 | 1.654359e-18 | -5.697258e-19 | 1.987760e-17 | 8.221763e-18 | 1.752222e-17 | -1.942312e-17 | -1.099805e-18 | 3.031280e-17 | -4.952675e-21 | -1.094244e-19 | -2.285040e-20 | -3.140286e-20 | -8.951914e-21 | -3.201393e-20 | 9.188216e-19 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |